PURSUhInT: Knowledge Distillation Framework

In Search of Informative Hint Points Based on Layer Clustering

Overview

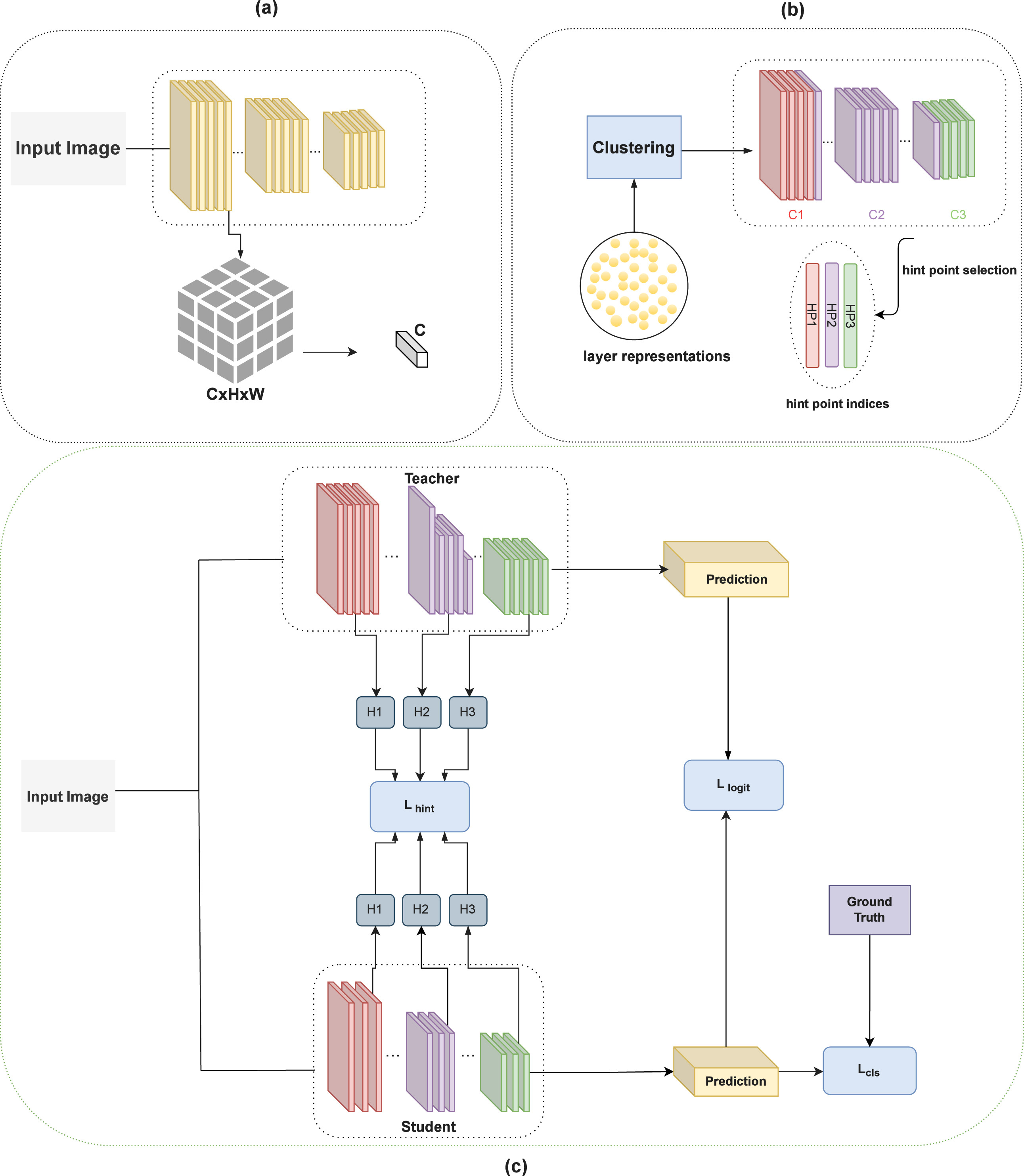

PURSUhInT introduces a novel approach to knowledge distillation by identifying informative hint points based on layer clustering, enabling efficient transfer of knowledge from large teacher models to smaller student models.

The framework compresses models by up to 2.5x with minimal accuracy loss. By carefully selecting which intermediate representations to transfer, PURSUhInT outperforms traditional knowledge distillation methods on multiple benchmark datasets.

Methodology

(a) Feature Extraction

The framework begins by extracting features from input images through successive neural network layers. These layers progressively reduce spatial dimensions while increasing feature depth, creating rich representations that capture hierarchical patterns in the data.
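To make the "smaller spatial size, greater depth" pattern concrete, the sketch below traces tensor shapes through a stack of convolutional stages using the standard convolution output-size formula. The three-stage configuration and the helper names `conv_output_size` and `trace_shapes` are illustrative assumptions, not the architecture used in the paper.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of a convolution (no dilation, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(input_hw, in_channels, stages):
    """Return (channels, height, width) for the input and after each stage.

    Each stage is a tuple (out_channels, kernel, stride, padding).
    """
    shapes = [(in_channels, input_hw, input_hw)]
    size = input_hw
    for out_channels, kernel, stride, padding in stages:
        size = conv_output_size(size, kernel, stride, padding)
        shapes.append((out_channels, size, size))
    return shapes


# Hypothetical three-stage extractor applied to a 32x32 RGB image.
stages = [(64, 3, 1, 1), (128, 3, 2, 1), (256, 3, 2, 1)]
shapes = trace_shapes(32, 3, stages)

for channels, height, width in shapes:
    print(f"{channels:>3} channels, {height}x{width}")
```

In this assumed configuration, each stride-2 stage halves the height and width while the channel count doubles.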

(b) Hint Point Selection

Layer representations are clustered to identify the most informative features. The clustering algorithm groups similar layer activations, and from these clusters, specific hint points (HP1, HP2, HP3) are selected. These hint points represent the most valuable intermediate representations for knowledge transfer.
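One way to picture this step is the minimal sketch below. It is a simplified stand-in rather than the clustering procedure from the paper: it assumes each layer is summarized by a descriptor vector, measures cosine similarity between neighboring layers, cuts the layer sequence at the least similar neighbors, and takes the last layer of each group as the hint point. The descriptors, the similarity measure, and the "last layer in the cluster" rule are all assumptions made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cluster_layers(descriptors, num_clusters):
    """Split ordered layers into contiguous clusters.

    Cuts are placed between the (num_clusters - 1) least similar
    pairs of adjacent layers.
    """
    sims = [
        cosine_similarity(descriptors[i], descriptors[i + 1])
        for i in range(len(descriptors) - 1)
    ]
    # A cut at index i separates layer i from layer i + 1.
    weakest = sorted(range(len(sims)), key=lambda i: sims[i])
    cuts = sorted(weakest[: num_clusters - 1])

    clusters, start = [], 0
    for cut in cuts:
        clusters.append(list(range(start, cut + 1)))
        start = cut + 1
    clusters.append(list(range(start, len(descriptors))))
    return clusters


def select_hint_points(clusters):
    """Assumed rule: use the last layer of each cluster as its hint point."""
    return [cluster[-1] for cluster in clusters]


# Hypothetical descriptors for six teacher layers.
descriptors = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.1, 0.9, 0.1],
    [0.0, 0.0, 1.0],
    [0.0, 0.1, 0.9],
]
clusters = cluster_layers(descriptors, num_clusters=3)
hint_points = select_hint_points(clusters)
print(clusters, hint_points)
```

With three clusters requested, the three selected layer indices play the role of HP1, HP2, and HP3.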

(c) Knowledge Distillation Training

The Student model is trained using three loss components: L_hint (matching intermediate features with Teacher), L_logit (matching final predictions with Teacher), and L_cls (matching predictions with ground truth). This multi-objective approach ensures the Student learns both from the Teacher's knowledge and the true labels.
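The three terms can be combined into a single training objective, as in the sketch below. The text above names the losses but not their exact form, so the choices here are assumptions drawn from common distillation practice: mean squared error for L_hint, temperature-softened KL divergence for L_logit, cross-entropy for L_cls, and hypothetical weights `alpha` and `beta`.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def hint_loss(student_feat, teacher_feat):
    """L_hint: mean squared error between flattened intermediate features."""
    diffs = [(s - t) ** 2 for s, t in zip(student_feat, teacher_feat)]
    return sum(diffs) / len(diffs)


def logit_loss(student_logits, teacher_logits, temperature=4.0):
    """L_logit: KL(teacher || student) on softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


def cls_loss(student_logits, label):
    """L_cls: cross-entropy against the ground-truth class index."""
    return -math.log(softmax(student_logits)[label])


def total_loss(student_logits, teacher_logits, label, hint_pairs,
               alpha=1.0, beta=1.0):
    """Weighted sum of the three terms; alpha and beta are assumed weights.

    hint_pairs holds one (student_feat, teacher_feat) pair per hint point.
    """
    l_hint = sum(hint_loss(s, t) for s, t in hint_pairs)
    l_logit = logit_loss(student_logits, teacher_logits)
    l_cls = cls_loss(student_logits, label)
    return l_cls + alpha * l_logit + beta * l_hint


# Toy example with one hint point and three classes.
loss = total_loss(
    student_logits=[2.0, 0.5, -1.0],
    teacher_logits=[3.0, 0.0, -2.0],
    label=0,
    hint_pairs=[([0.2, 0.4], [0.0, 0.5])],
)
print(loss)
```

When the Student reproduces the Teacher's features and logits exactly, the hint and logit terms vanish and only the ground-truth term remains.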

PURSUhInT Framework

The PURSUhInT framework: (a) feature extraction, (b) hint point selection through layer clustering, and (c) knowledge distillation training with a Teacher-Student architecture.

Key Contributions

2.5x Model Compression

Reduces model size by up to 2.5x while preserving performance.

Layer Clustering

Novel clustering approach to identify the most informative layers for knowledge transfer.

Informative Hint Points

Automatic selection of optimal hint points for efficient knowledge distillation.

Minimal Accuracy Loss

Maintains high accuracy even with significant model compression.


Publication

Expert Systems with Applications (Impact Factor: 8.5)

PURSUhInT: In Search of Informative Hint Points Based on Layer Clustering for Knowledge Distillation. Volume 213, 2023.
